Why Traditional SEO Dies in 2026: New AI Search Optimization Rules

AI search engine optimization is transforming the digital landscape at an unprecedented rate. In June 2025, AI referrals to top websites rose 357% year-over-year, reaching 1.13 billion visits. This growth is reshaping how we approach SEO strategy.

Traditional search patterns are shifting rapidly. As of November 2025, 60% of search engine results pages (SERPs) feature AI Overviews, while 60% of Google searches never leave the results page. The impact of AI on SEO is hard to overstate when Google processes 5.9 million searches every minute, or roughly 8.5 billion searches per day. We therefore need to rethink our AI SEO strategy for this new reality.

In this article, we’ll explore why conventional SEO approaches are failing and how AI-driven search engine optimization differs fundamentally from traditional methods. We’ll then outline AI search engine optimization best practices to help you thrive in 2026. We’ll cover content structuring, authority building, and the essential metrics to track as AI continues to reshape the future of SEO.

Why Traditional SEO Fails in the Age of AI Search

Traditional SEO practices are rapidly becoming obsolete as AI fundamentally reshapes how search works. The stark reality is that marketers who cling to outdated optimization methods will see their online visibility evaporate.

Decline of keyword-first strategies in AI-driven SERPs

Keyword-centric approaches that once dominated search engine optimization now deliver diminishing returns. The average Google search query is just 4.2 words, typically short and transactional like “best pizza Melbourne” [1]. By contrast, the average ChatGPT query runs to 23 words: full sentences and complex questions that reflect conversational intent [1]. This represents a fundamental shift in search behavior.

Indeed, approximately 70% of AI search queries demonstrate completely different intent than traditional Google searches [1]. Users are no longer typing keywords; they’re having conversations. AI assistants like ChatGPT and Perplexity offer answers that feel concise, personalized, and ironically, more human than traditional search results [2].

Two consequences follow. First, AI prioritizes topical understanding over individual keywords. Second, it values context and conversational flow. As a result, optimization now requires comprehensive coverage of entire topic areas rather than targeting specific terms [3].

AI parsing vs. traditional page indexing

AI search engines work very differently from Google’s traditional crawlers. Rather than ranking pages based on keywords and links, AI systems build knowledge indices by scraping and processing information across multiple sources [1].

The difference is profound: traditional crawlers collect pages, while AI crawlers extract knowledge. According to research, 52% of sources cited in AI search results do not appear on Google’s first page [1]. A page-one ranking, however well optimized, no longer guarantees visibility in AI answers.

Additionally, AI systems extract chunks of content and combine them with information from other sources [3]. This means each section of your content needs to stand alone without requiring context from other parts of your page. Many AI crawlers also cannot execute JavaScript files [4], creating another technical hurdle for JavaScript-heavy websites.
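Many AI crawlers do not run JavaScript. A quick sanity check is therefore to confirm that your key facts appear in the raw HTML your server returns, before any scripts execute. The sketch below is a rough heuristic built on Python’s standard `html.parser`. It ignores text inside `<script>`, `<style>`, and `<template>` elements and reports which phrases are missing. The function name `missing_without_js` and the sample markup are hypothetical. The sketch does not fetch pages itself and does not model any particular crawler.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text that is present in the HTML before any script runs."""

    SKIP_TAGS = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def missing_without_js(raw_html, key_phrases):
    """Return the key phrases absent from the server-rendered text (case-insensitive)."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()  # flush any buffered trailing text
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# Hypothetical page whose spec line is only injected by JavaScript.
page = """<html><body>
<h1>Pump X200</h1>
<div id="specs"></div>
<script>document.getElementById("specs").textContent = "Max flow 40 L/min";</script>
</body></html>"""
print(missing_without_js(page, ["Pump X200", "Max flow 40 L/min"]))  # ['Max flow 40 L/min']
```

Any phrase this check reports is a candidate for server-side rendering or static HTML.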

AI impact on SEO visibility and traffic

The impact on traffic has been devastating for unprepared businesses. Studies show AI Overviews can cause a 15-64% decline in organic traffic, depending on industry and search type [5]. Approximately 60% of searches now yield no clicks at all as AI-generated answers satisfy users directly on the results page [5].

Even market leaders aren’t guaranteed visibility in AI-powered search. A brand’s own sites typically comprise only 5-10% of the sources that AI-search references [6]. Instead, AI pulls from diverse sources including forums, reviews, and other third-party content [6].

Despite these challenges, AI search traffic demonstrates significantly higher value. Visitors arriving via AI search are often further along in their buyer journey – ready to take action. Many companies report that up to 10% of their conversions now come from AI-driven search [5], with AI search visitors converting at rates 4.4 times higher than traditional organic search visitors [4].

The message is clear: AI-driven search engine optimization requires fundamentally different approaches from traditional SEO. Businesses must adapt their AI SEO strategy to stay visible in this new ecosystem or risk becoming invisible to a growing segment of users.

How AI Search Engines Parse and Select Content

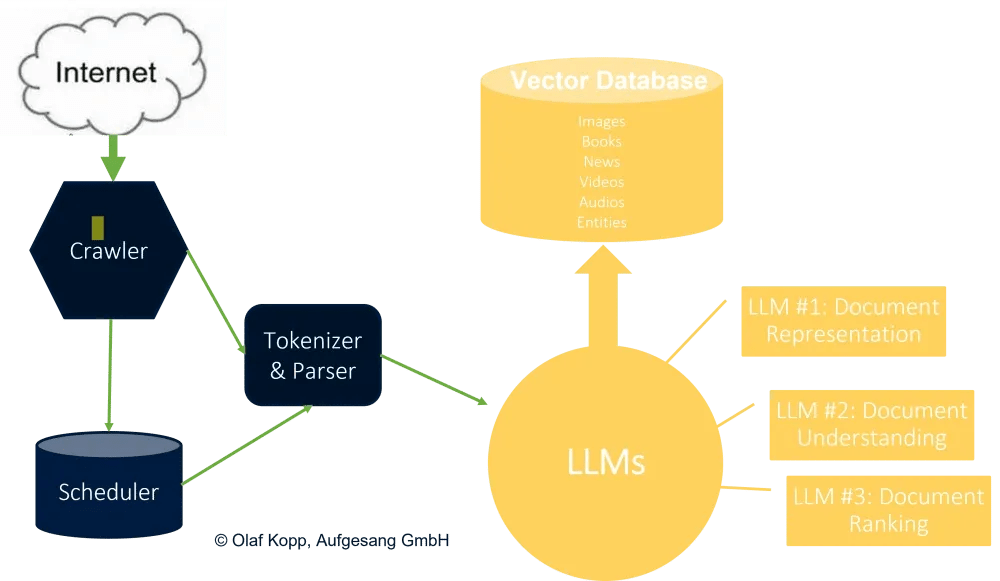

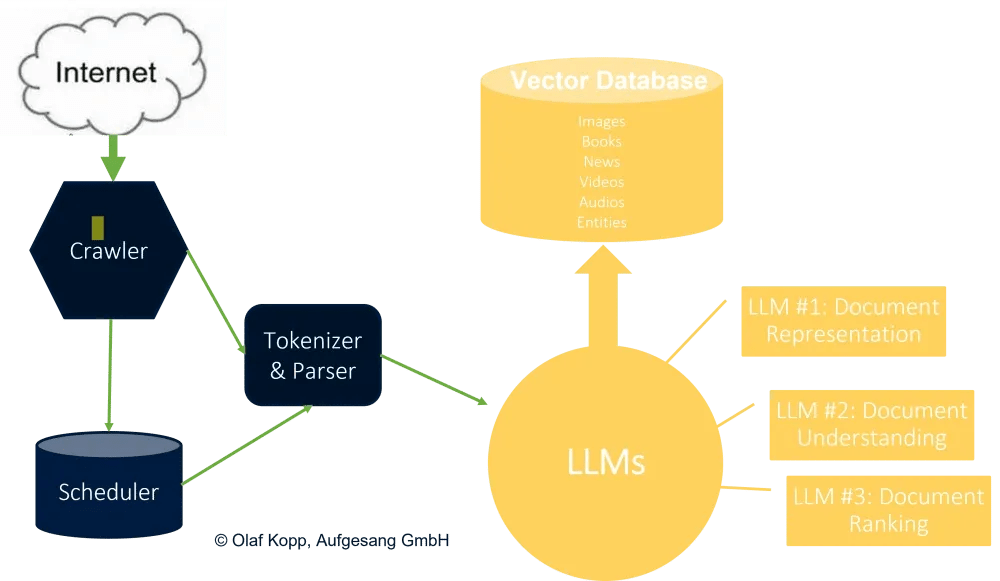

Image Source: Online Marketing Consulting

Understanding the mechanics of AI content processing reveals why traditional optimization approaches fall short. Unlike conventional search engines, AI systems approach your content in fundamentally different ways.

Modular content extraction in AI assistants

AI assistants don’t read pages from top to bottom like humans do. Instead, they break content into smaller, usable pieces through a process called parsing [7]. These modular pieces become the building blocks that get ranked and assembled into answers. The modular approach lets AI systems retrieve the most relevant information efficiently when a query arrives.

Different AI systems employ various extraction strategies based on several factors: content type, embedding model used, expected query complexity, and desired answer length [8]. This explains why AI search results often combine information from multiple sources—they’re extracting chunks of relevant content and assembling them into cohesive responses.

Layout-aware extraction methods use OCR technology and detection models to identify objects in documents, particularly tables, graphs, and charts [8]. This capability allows AI search engines to comprehend visual elements in your content, not just text.
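Extraction strategies vary by system. Heading-based chunking is one common approach, and it shows why each section should stand on its own. The minimal sketch below assumes Markdown-style `#` headings as input. It splits a page at its headings and prefixes every chunk with the page title and heading path, so a chunk retrieved in isolation still carries its context. The function `chunk_by_headings` is hypothetical and does not describe how any particular AI assistant works. Production systems may also use fixed-size or overlapping windows, or embedding-based splits.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunk_by_headings(page_title, markdown_text):
    """Split Markdown at headings into chunks that each carry their own context."""
    chunks = []
    path = []  # (level, text) of the current heading and its ancestors
    body = []  # lines of the section being collected

    def flush():
        text = " ".join(line.strip() for line in body if line.strip())
        if text:
            context = " > ".join([page_title] + [heading for _, heading in path])
            chunks.append(f"{context}: {text}")
        body.clear()

    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous section before starting a new one
            level = len(match.group(1))
            # Pop headings at the same or a deeper level; they are not ancestors.
            while path and path[-1][0] >= level:
                path.pop()
            path.append((level, match.group(2).strip()))
        else:
            body.append(line)
    flush()
    return chunks


page = """# Pump X200
The X200 is a compact centrifugal pump.
## Flow rate
Maximum flow is 40 L/min.
## Warranty
Two years, parts and labour."""
for chunk in chunk_by_headings("Acme Pumps", page):
    print(chunk)
```

For this sample, the second chunk reads `Acme Pumps > Pump X200 > Flow rate: Maximum flow is 40 L/min.` It remains meaningful even if it is the only piece an assistant retrieves.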

Importance of semantic clarity and structured data

For effective AI search engine optimization, structured data has become essential. It provides a standardized format for classifying page content, helping search engines understand what elements like ingredients, cooking times, or product specifications actually mean [9].

Structured data creates what experts call a “content knowledge graph”—a data layer that defines your brand’s entities and relationships across content [10]. Through schema markup implementation, you tell machines:

- What entities exist on your page (people, products, services)

- How these entities relate to each other

- The context in which information should be understood

Google, Microsoft, and ChatGPT have all confirmed that structured data helps large language models better understand digital content [10]. In this new era of AI-driven search engine optimization, “context, not content, is king” [10]. By translating your content into Schema.org markup, you’re essentially building a data foundation that AI can interpret accurately.

JSON-LD (Google’s preferred format) has emerged as the most flexible implementation method, allowing placement in a separate script tag without disrupting your HTML structure [11].
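The Python below is a minimal sketch of that separation. It serializes a Schema.org object into a standalone JSON-LD `<script>` tag using only the standard `json` module. The helper name `json_ld_script` and the article values are hypothetical placeholders. Escaping `</` prevents a string value from accidentally closing the script element.

```python
import json


def json_ld_script(data):
    """Serialize a Schema.org object as a JSON-LD <script> tag.

    The tag can sit in <head> or <body> without touching the visible HTML.
    """
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # "<\/" is a valid JSON escape for "</", so "</script>" in a value cannot end the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article metadata.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Why Traditional SEO Dies in 2026",
    "author": {"@type": "Person", "name": "Jane Example"},
    "datePublished": "2025-11-01",
}
print(json_ld_script(article))
```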

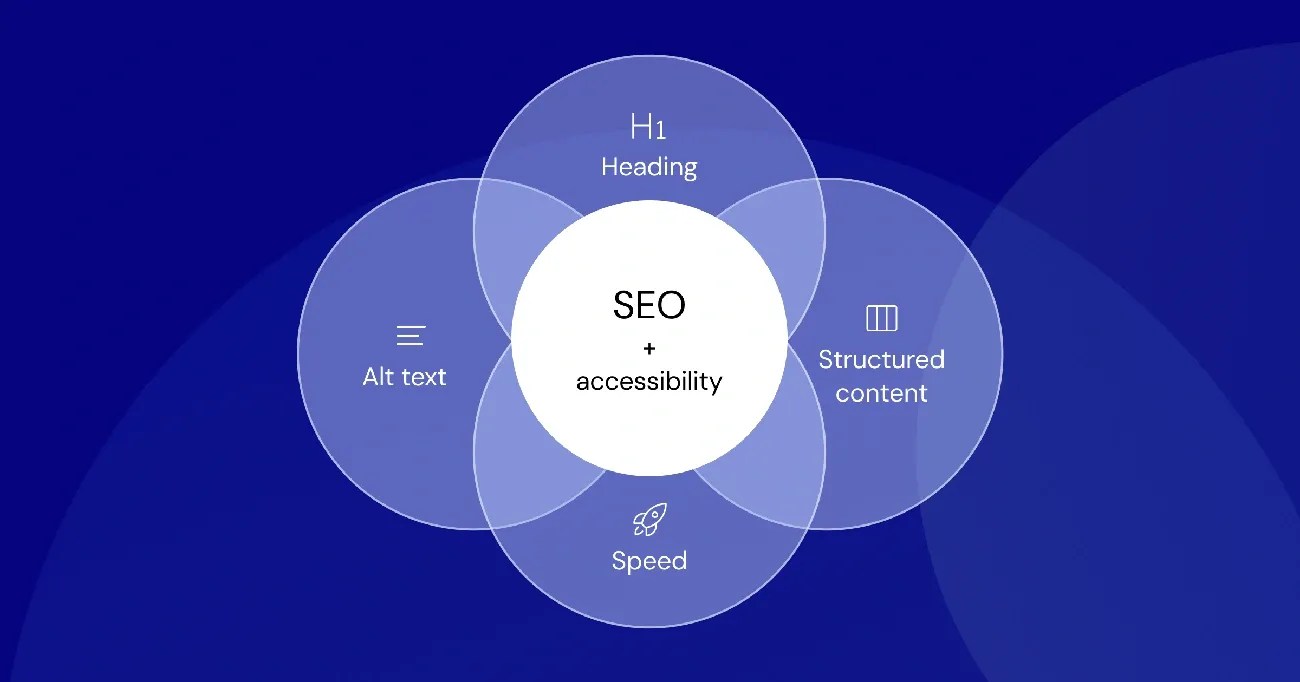

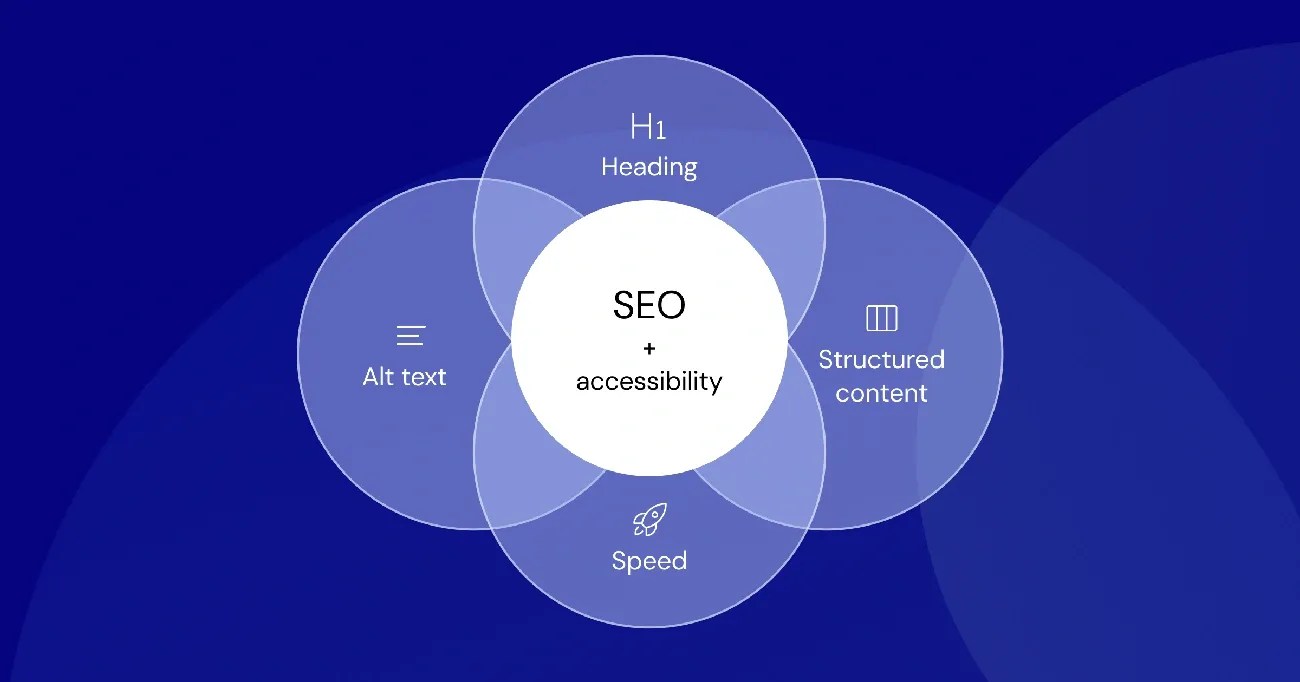

Role of H1, H2, and metadata in AI parsing

Headers function as critical signposts that guide AI through your content. They mark boundaries where one idea ends and another begins [7]. Moreover, your page title, description, and H1 tag serve as primary signals AI systems use to interpret your page’s purpose and scope [7].

Hierarchical heading structure (H1→H2→H3) creates what AI perceives as a “table of contents” for your content [12]. This hierarchy matters significantly—LLMs analyze heading structure to understand content organization. Pages with proper heading nesting are much easier for AI to parse than walls of unstructured text [1].

One cardinal rule for AI SEO strategy: never skip heading levels [13]. Jumping from H1 to H3 confuses both readers and AI systems, disrupting the logical content flow. Page titles and meta descriptions should also align closely with your H1 tag, creating consistent context signals [7]. This alignment increases both discoverability and confidence signals for AI systems.
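One simple way to catch skipped levels is to walk the headings in document order and flag any jump of more than one level downward. This sketch uses a regular expression rather than a full HTML parser, so treat it as a rough lint check. It assumes the page should open with an `<h1>`, and the function name `skipped_heading_levels` is hypothetical. Moving back up, for example from `<h3>` to `<h2>`, is allowed.

```python
import re

# Matches opening heading tags such as <h1> or <h2 class="x">, but not <hr> or <header>.
HEADING_TAG = re.compile(r"<h([1-6])\b", re.IGNORECASE)


def skipped_heading_levels(html_text):
    """Return (previous_level, level) pairs where the outline jumps down
    more than one level, e.g. an <h3> directly after an <h1>."""
    problems = []
    previous = 0  # document start counts as level 0, so the first heading should be h1
    for match in HEADING_TAG.finditer(html_text):
        level = int(match.group(1))
        if level > previous + 1:
            problems.append((previous, level))
        previous = level
    return problems


print(skipped_heading_levels("<h1>Guide</h1><h3>Setup</h3><h2>Usage</h2>"))  # [(1, 3)]
```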

Heading into 2026, focusing on these structural elements will likely yield better results in AI search than traditional keyword optimization alone.

AI Search Engine Optimization Best Practices for 2026

Image Source: Elementor

Successful AI search engine optimization in 2026 requires technical alignment with how AI systems extract and present information. The following best practices can help your content stay visible in AI-powered search results.

Use of schema markup for FAQs, HowTos, and Products

Schema markup acts as a universal language that enables AI systems to accurately interpret your content. LocalBusiness, FAQ, and Review schema are particularly valuable since they tell Google’s AI precisely what your content means [14].

Schema markup creates a data layer defining your entities and relationships, making your content machine-readable without changing its appearance to users. Common schema types that boost AI visibility include:

- FAQ schema for question-answer content

- HowTo schema for step-by-step guides

- Product schema for e-commerce listings

- Article schema for blog posts and news
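In the FAQ case, a Schema.org `FAQPage` lists each `Question` under `mainEntity`, and each question's `acceptedAnswer` holds an `Answer`. The helper below is a hypothetical sketch that builds that structure from plain question-and-answer pairs. The sample questions are placeholders. The result still needs to be embedded in a `<script type="application/ld+json">` tag and validated, for example with Google's Rich Results Test, before publishing.

```python
import json


def faq_page_schema(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_page_schema([
    ("What is AI search optimization?",
     "Structuring content so AI systems can extract and cite it."),
    ("Which structured data format does Google recommend?", "JSON-LD."),
])
print(json.dumps(faq, indent=2))
```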

According to Schema.org data, approximately 45 million of the world’s 362.3 million registered domains use Schema.org markup, so only about 12.4% of websites use structured data [15]. This leaves a significant competitive advantage for sites that adopt it now.

Creating snippable content blocks for AI Overviews

AI systems favor content that delivers answers up front. Structure information with clear headings followed immediately by concise answers. Start with a direct definition, then expand on the supporting context [16].

Turn priority pages into quotable sources by using short paragraphs, explicit headings, and direct, two-sentence answers at the top of each section [17]. Pages that already rank organically are more likely to be cited in AI Overviews, highlighting how solid SEO fundamentals and clear structure work together [17].
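One rough way to audit priority pages for this pattern is to check that the first paragraph under each heading is short enough to quote. The sketch below assumes you have already split a page into a mapping of heading to paragraphs. The two-sentence and 60-word thresholds are illustrative choices, not published limits. The sentence counter is a crude punctuation heuristic, and `answer_first_report` is a hypothetical name.

```python
import re

# A sentence ends at . ! or ? followed by whitespace or the end of the text,
# so decimals like "4.2" are not counted.
SENTENCE_END = re.compile(r"[.!?](?=\s|$)")


def answer_first_report(sections, max_sentences=2, max_words=60):
    """Map each heading to True if its opening paragraph is short enough to quote.

    `sections` maps heading -> list of paragraphs; thresholds are illustrative.
    """
    report = {}
    for heading, paragraphs in sections.items():
        opening = paragraphs[0] if paragraphs else ""
        sentences = len(SENTENCE_END.findall(opening))
        words = len(opening.split())
        report[heading] = sentences <= max_sentences and 0 < words <= max_words
    return report


sections = {
    "What is an AI Overview?": [
        "An AI Overview is an AI-generated summary at the top of Google results. "
        "It cites a handful of sources.",
        "Longer background follows here...",
    ],
    "History of search": ["Search began with directories. Then came crawlers. Then ranking."],
}
print(answer_first_report(sections))  # {'What is an AI Overview?': True, 'History of search': False}
```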

Optimizing for voice and conversational queries

Voice searches average 23 words compared to traditional 4.2-word queries [18]. Therefore, optimize for longer, conversational questions that mirror natural speech patterns. Implement FAQ-style formats at the bottom of content pages to provide voice assistants with clear extraction points [18].

FAQ schema is particularly effective for voice search because it mirrors how people phrase questions. Its question-and-answer structure makes it easier for AI to find your answers when users ask specific questions [19].

Avoiding hidden content and PDF-only formats

AI search engines often struggle to interpret PDF documents because PDFs lack semantic HTML structure. When companies publish product information exclusively as PDFs, they create what experts call “PDF invisibility” [3]. As a result, critical product specifications can be missing from AI-generated vendor lists.

One polymer manufacturer with 320 product datasheets published only as PDFs estimated that roughly £3M in annual pipeline opportunities stayed invisible because AI couldn’t match its products to technical queries [3]. Apromote recommends keeping PDFs available for download while creating structured web versions that AI systems can interpret.

The solution isn’t PDF optimization but rather converting critical information to structured web content that AI systems can accurately parse and cite.
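This sketch illustrates that conversion. It renders datasheet fields as a semantic HTML table plus a matching Schema.org `Product` with `additionalProperty` values. It assumes the specifications have already been extracted from the PDF into a dictionary. The function name `spec_to_html` and the sample product are hypothetical. Values are HTML-escaped before insertion.

```python
import json
from html import escape


def spec_to_html(product_name, specs):
    """Render datasheet fields as an HTML table plus Product JSON-LD.

    `specs` maps a property name to a display value, e.g. "Tensile strength": "55 MPa".
    """
    rows = "\n".join(
        f'  <tr><th scope="row">{escape(name)}</th><td>{escape(value)}</td></tr>'
        for name, value in specs.items()
    )
    schema = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product_name,
        "additionalProperty": [
            {"@type": "PropertyValue", "name": name, "value": value}
            for name, value in specs.items()
        ],
    }
    # Escape "</" so no value can close the script element early.
    json_ld = json.dumps(schema, ensure_ascii=False).replace("</", "<\\/")
    return (
        f"<h2>{escape(product_name)} specifications</h2>\n"
        f"<table>\n{rows}\n</table>\n"
        f'<script type="application/ld+json">{json_ld}</script>'
    )


print(spec_to_html("PX-100 resin", {"Tensile strength": "55 MPa", "Melt flow index": "12 g/10 min"}))
```

The table serves human readers and AI parsers alike, while the JSON-LD restates the same values in machine-readable form.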

Building Authority Signals for AI-Driven Search

Authority signals determine whether AI search engines trust your content enough to cite it. Building authority for AI search requires fundamentally different approaches from traditional SEO tactics.

E-E-A-T principles in AI SEO strategy

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness—Google’s framework for evaluating content quality [20]. Although not direct ranking signals, these principles are critical for ai search engine optimization because AI systems analyze whether content demonstrates genuine value and credibility.

Among these elements, trust functions as the foundation. As Google states: “Trust is the most important member of the E-E-A-T family because untrustworthy pages have low E-E-A-T no matter how Experienced, Expert, or Authoritative they may seem” [20]. For an effective AI SEO strategy, ensure your content is factually accurate, transparent, and supported by credible citations.

Author bios and verifiable credentials

AI search engines prioritize content from authors with clear credentials and demonstrated experience [21]. Pages with detailed author information that signals expertise receive significantly more AI citations. For a law firm, for example, every blog post should include an author bio detailing education, bar admissions, practice areas, and years of experience [21].

Showcasing credentials involves:

- Including professional certifications and qualifications

- Creating comprehensive bio pages with professional photos

- Highlighting media mentions and speaking engagements

- Using schema markup for author profiles [21]
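For the last item, Schema.org’s `Person` type can describe an author. It uses `hasCredential` for qualifications, `sameAs` for external profiles, and `worksFor` for the employer. The helper below is a hypothetical sketch. The names and URL in the example are placeholders, and every value you publish should be verifiable.

```python
import json


def author_schema(name, job_title, credentials, profile_urls, works_for=None):
    """Build a Schema.org Person object for an author bio page."""
    person = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        # Each credential becomes an EducationalOccupationalCredential entry.
        "hasCredential": [
            {"@type": "EducationalOccupationalCredential", "name": credential}
            for credential in credentials
        ],
        "sameAs": profile_urls,  # e.g. professional profile pages that confirm identity
    }
    if works_for:
        person["worksFor"] = {"@type": "Organization", "name": works_for}
    return person


# Hypothetical author.
author = author_schema(
    "Jane Example",
    "Partner",
    ["J.D.", "State bar admission"],
    ["https://www.example.com/people/jane-example"],
    works_for="Example LLP",
)
print(json.dumps(author, indent=2))
```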

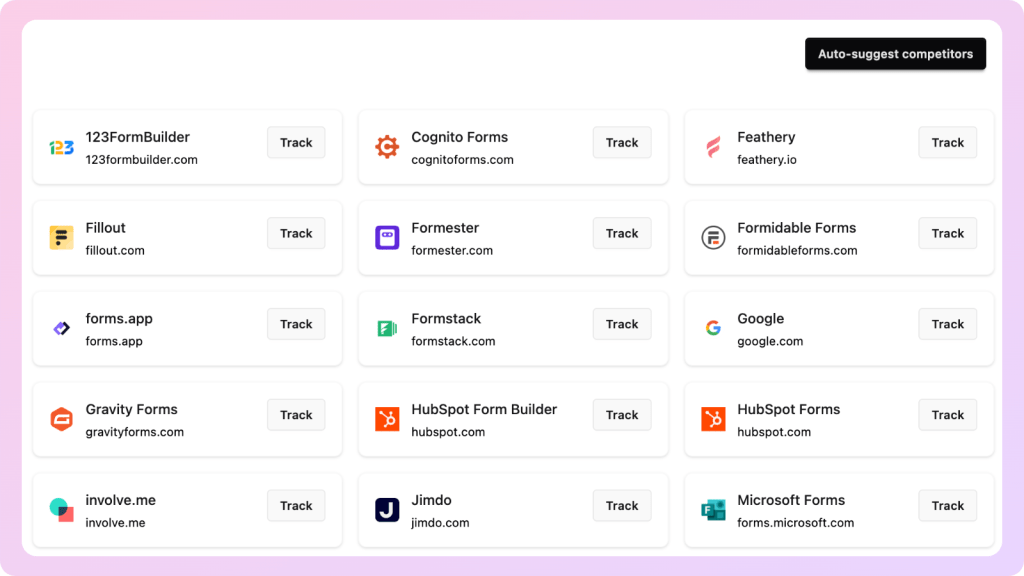

Backlink quality vs. citation frequency in AI results

The rules of visibility have fundamentally changed. AI citations—mentions in AI-generated answers—now carry more weight than traditional backlinks [22]. Research shows AI citations typically generate 3-5x higher conversion rates than backlink traffic, with approximately 10-15% conversion rates compared to 1-3% for traditional backlinks [22].

Brand search volume, not backlinks, is the strongest predictor of LLM citations, with a correlation coefficient of 0.334 [23]. This signals a major shift in AI-driven search engine optimization: being recommended by AI assistants can now matter more for visibility than acquiring numerous backlinks from authority sites.
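To put the 0.334 figure in context: a Pearson correlation coefficient r ranges from -1 to 1, and r² is the share of variance a linear fit explains. Since 0.334² ≈ 0.11, brand search volume alone accounts for roughly 11% of the variation in citations under a linear model. That is a moderate relationship, and correlation does not establish causation. The report's own method is not described here. The sketch below is simply the standard textbook formula for r, and `pearson` is a hypothetical helper name.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient r between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


r = 0.334  # coefficient reported for brand search volume vs. LLM citations
print(f"r = {r}, r^2 = {r * r:.3f}")  # prints r = 0.334, r^2 = 0.112
```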

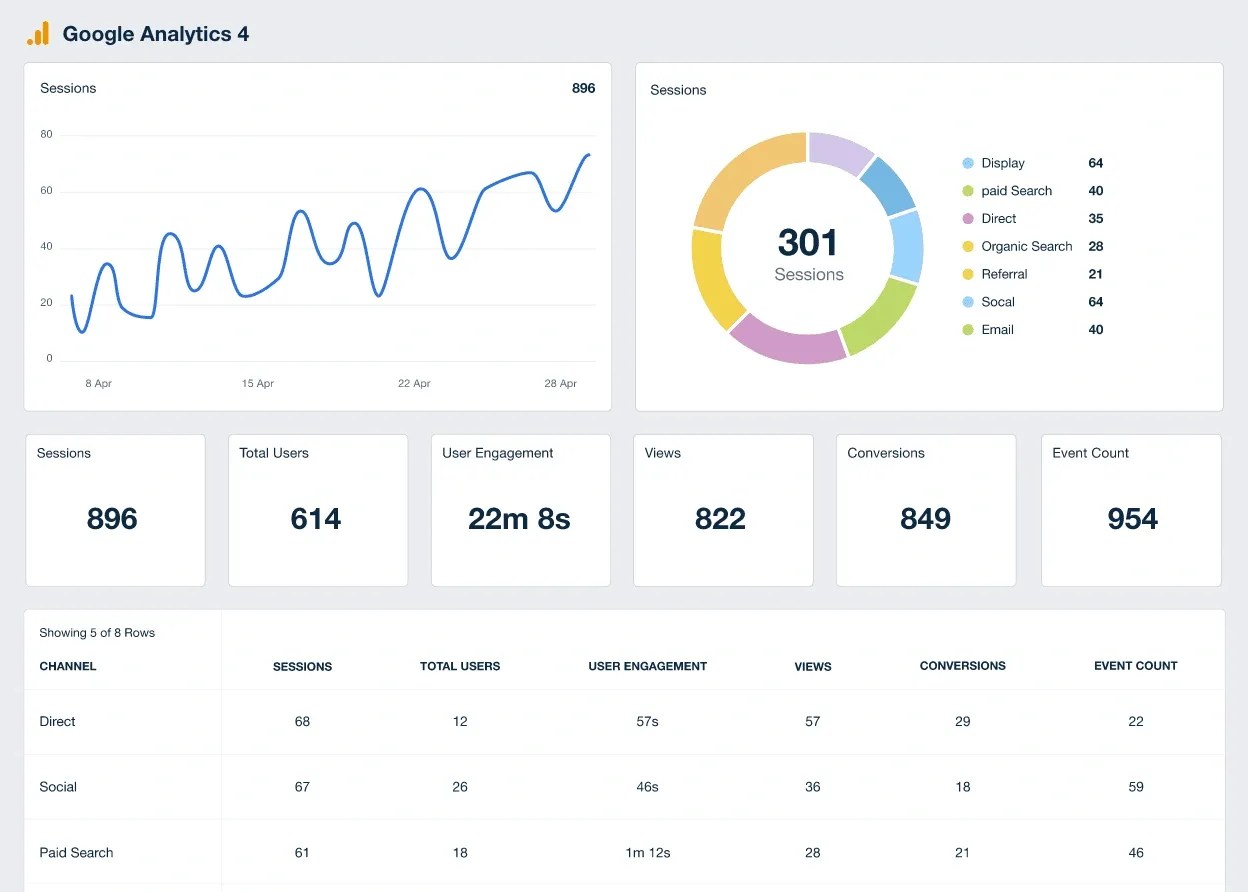

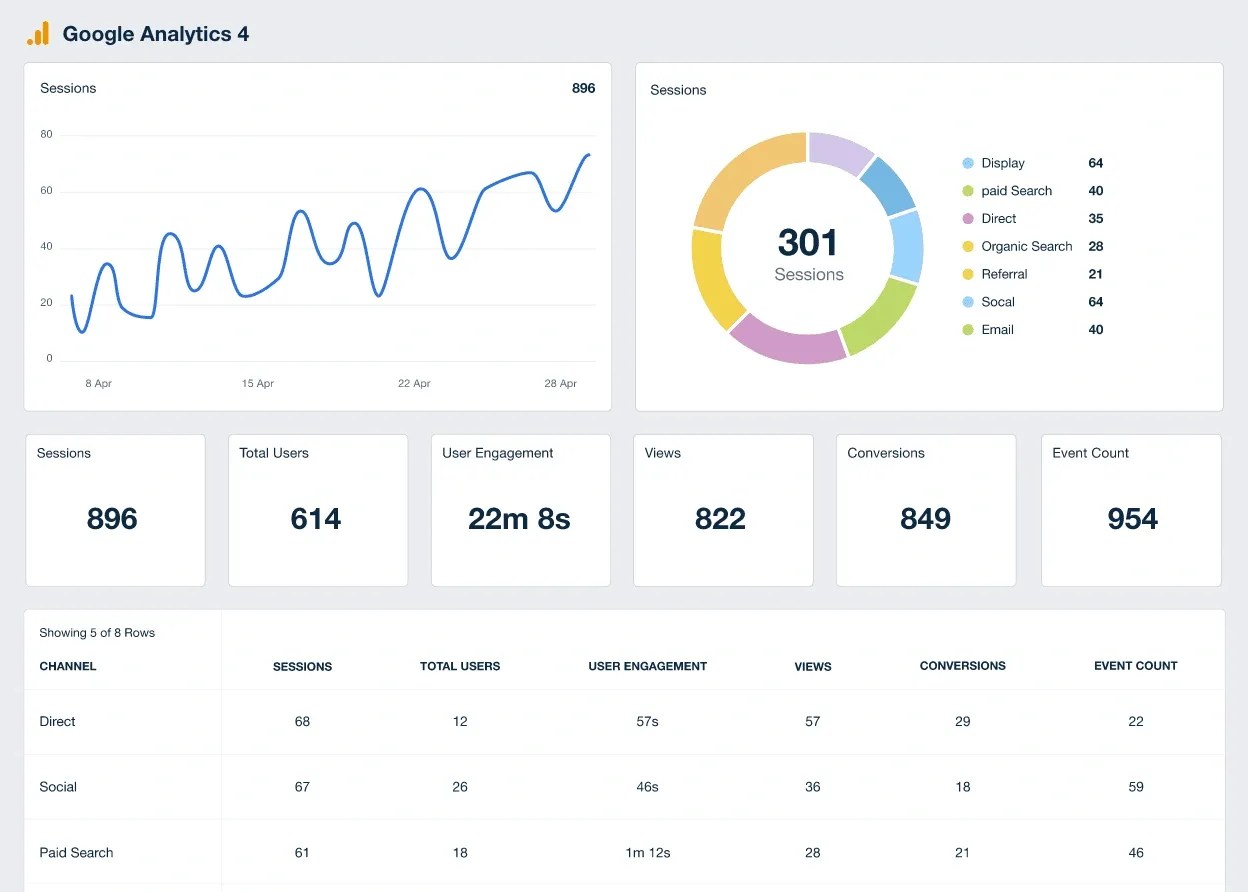

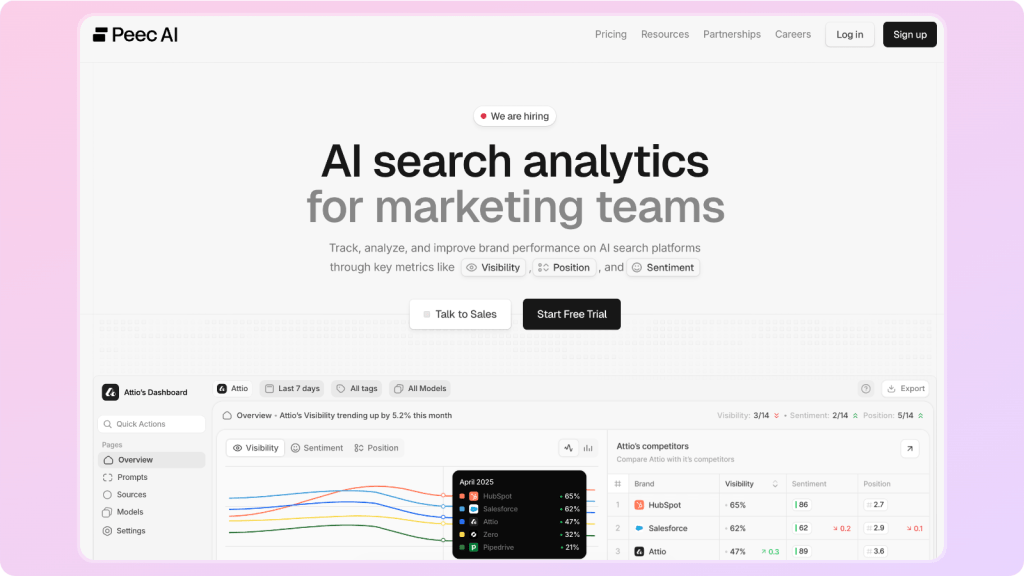

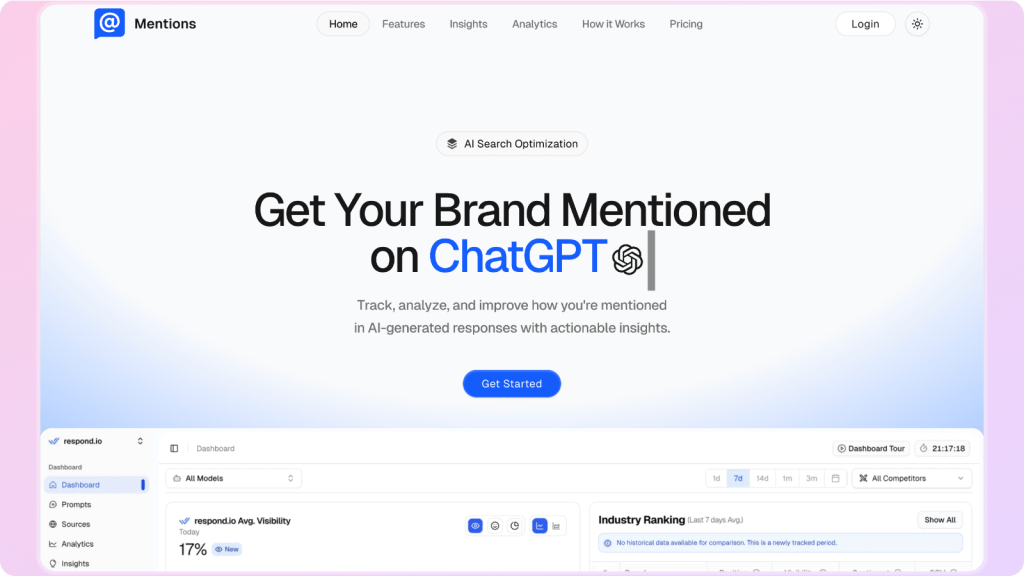

Tools and Metrics to Track AI SEO Performance

Image Source: AgencyAnalytics

Measuring success in the AI search landscape requires specialized tracking tools that traditional analytics platforms simply don’t offer. Proper monitoring is foundational to any effective ai seo strategy.

Using Cartesiano.ai and SERanking AI Results Tracker

Cartesiano.ai stands out as a dedicated AI visibility tracker showing how often your brand appears across major AI platforms. The tool delivers crucial metrics including visibility percentage, brand sentiment (0-100 scale), and average position when mentioned. Meanwhile, SE Ranking’s AI Results Tracker provides complementary capabilities with its “Top 3 Presence” metric showing the share of AI answers where your mentions appear in top positions.
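Neither tool’s exact formulas are documented in this article. The sketch below only illustrates how similar metrics could be computed from your own sample of AI answers. It assumes you have recorded, for each prompt and platform, the brands mentioned in order of appearance. `AIAnswer`, `visibility_metrics`, and the sample brands are hypothetical, and sentiment scoring is left out.

```python
from dataclasses import dataclass


@dataclass
class AIAnswer:
    """One sampled AI answer: the brands it mentions, in order of appearance."""
    platform: str
    prompt: str
    brands_in_order: list


def visibility_metrics(answers, brand, top_n=3):
    """Return visibility %, average position when mentioned, and top-N presence %."""
    # 1-based position of the brand in each answer that mentions it.
    positions = [
        a.brands_in_order.index(brand) + 1
        for a in answers
        if brand in a.brands_in_order
    ]
    total = len(answers)
    return {
        "visibility_pct": 100 * len(positions) / total if total else 0.0,
        "avg_position": sum(positions) / len(positions) if positions else None,
        "top_n_presence_pct": 100 * sum(p <= top_n for p in positions) / total if total else 0.0,
    }


answers = [
    AIAnswer("ChatGPT", "best compact pump", ["Acme", "Globex"]),
    AIAnswer("Perplexity", "best compact pump", ["Globex", "Initech", "Umbrella", "Acme"]),
    AIAnswer("Gemini", "best compact pump", ["Globex"]),
    AIAnswer("ChatGPT", "pump warranty terms", ["Initech"]),
]
print(visibility_metrics(answers, "Acme"))
# {'visibility_pct': 50.0, 'avg_position': 2.5, 'top_n_presence_pct': 25.0}
```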

Monitoring featured snippet inclusion

Featured snippets are prime real estate in AI-driven search. To track them, filter keywords by “Featured Snippet” in your campaigns to find queries where your site ranks on page one but hasn’t yet captured the snippet [5]. Structured data can also help your pages stand out here. Only about 45 million of the world’s 362.3 million domains use schema markup, and it makes your content easier for search engines to interpret.
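If your rank tracker can export keyword positions with snippet flags, the same filter can be reproduced offline. This sketch assumes a hypothetical export with one row per keyword. `KeywordRow` and its field names are illustrative and do not match Moz's or any other tool's actual export format.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    """One row of a hypothetical rank-tracking export."""
    keyword: str
    position: int  # your organic position (1 = top)
    has_featured_snippet: bool
    you_own_snippet: bool


def snippet_opportunities(rows):
    """Keywords with a featured snippet you rank on page one for but don't own, best rank first."""
    return sorted(
        (r for r in rows
         if r.has_featured_snippet and not r.you_own_snippet and r.position <= 10),
        key=lambda r: r.position,
    )


rows = [
    KeywordRow("pump flow rate", 3, True, False),
    KeywordRow("pump warranty", 12, True, False),  # page two: excluded
    KeywordRow("best pump", 1, True, True),        # snippet already owned
    KeywordRow("pump sizes", 7, False, False),     # no snippet on the SERP
    KeywordRow("pump noise", 2, True, False),
]
print([r.keyword for r in snippet_opportunities(rows)])  # ['pump noise', 'pump flow rate']
```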

Tracking AI Overviews and voice search visibility

Local Falcon calculates Share of AI Voice (SAIV), the percentage of map pins where your brand receives mentions [24]. This metric is particularly valuable for Apromote clients targeting regional markets. For voice optimization, compare your target queries with the roughly 23-word average length of conversational queries [5], and monitor whether your FAQ-structured content receives citations. Tools like SEOClarity detect brand inaccuracies and hallucinations in real time [25], helping keep your voice search presence accurate.
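Based only on the definition above, SAIV can be sketched as the share of grid pins whose AI answer mentions your brand. Local Falcon’s actual calculation may differ, for example by weighting pins or positions. The function name, the coordinates, and the brand names below are hypothetical.

```python
def share_of_ai_voice(pin_results, brand):
    """Percentage of grid pins whose AI answer mentions the brand.

    `pin_results` maps a (lat, lng) pin to the brand names mentioned there.
    """
    if not pin_results:
        return 0.0
    hits = sum(1 for brands in pin_results.values() if brand in brands)
    return 100 * hits / len(pin_results)


# Hypothetical 4-pin scan around a city centre.
grid = {
    (-37.81, 144.96): ["Acme Plumbing", "Bravo Plumbing"],
    (-37.82, 144.97): ["Bravo Plumbing"],
    (-37.80, 144.95): ["Acme Plumbing"],
    (-37.83, 144.98): [],
}
print(share_of_ai_voice(grid, "Acme Plumbing"))  # 50.0
```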

Conclusion

Traditional SEO approaches will become virtually obsolete by 2026 as AI fundamentally transforms search behavior and content delivery. Throughout this article, we’ve examined how AI referrals have skyrocketed by 357% year-over-year and why 60% of searches now never leave the results page. These statistics clearly indicate an urgent need for businesses to adapt.

The shift from keyword-centric strategies to comprehensive topic coverage represents perhaps the most significant change. AI systems prioritize semantic understanding over simple keyword matching, extracting knowledge rather than merely indexing pages. This difference explains why 52% of sources cited in AI search results don’t even appear on Google’s first page.

Structure now matters more than ever. AI parses content differently, breaking it into modular pieces through extraction methods that identify the most relevant information. Implementing schema markup, creating clear heading hierarchies, and designing snippable content blocks should therefore boost visibility in AI-generated answers.

We must also recognize that authority signals work differently in AI search. AI citations can generate conversion rates 3-5x higher than traditional backlink traffic. Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) principles form the foundation of credibility, with trust serving as the cornerstone of AI visibility.

Looking ahead, businesses that fail to optimize for AI search will likely see dramatic traffic declines, regardless of their traditional SEO success. Conversely, companies embracing AI-friendly content structures, schema implementation, and authority signals will thrive in this new ecosystem. The future belongs to those who understand that AI doesn’t just change the rules of SEO—it rewrites them entirely.

Finally, tracking the right metrics will determine success. Tools like Cartesiano.ai and SE Ranking’s AI Results Tracker, along with metrics like Share of AI Voice, provide essential visibility into your AI search performance. Above all, remember that AI search visitors convert at rates 4.4 times higher than traditional organic traffic. That makes this shift not just necessary but potentially lucrative for prepared businesses.

References

[1] – https://www.searchenginejournal.com/how-llms-interpret-content-structure-information-for-ai-search/544308/

[2] – https://www.forbes.com/sites/kevinkruse/2025/08/14/seo-is-dead-3-strategies-to-win-in-the-age-of-ai-search/

[3] – https://graph.digital/guides/ai-visibility/pdf-invisibility

[4] – https://help.seranking.com/hc/en-us/articles/16335399186460-How-to-use-AI-Results-Tracker-Rankings

[5] – https://moz.com/blog/identify-featured-snippet-opportunities-next-level

[7] – https://about.ads.microsoft.com/en/blog/post/october-2025/optimizing-your-content-for-inclusion-in-ai-search-answers

[8] – https://docs.kore.ai/xo/searchai/content-extraction/extraction/

[9] – https://developers.google.com/search/docs/appearance/structured-data/intro-structured-data

[10] – https://www.searchenginejournal.com/structured-datas-role-in-ai-and-ai-search-visibility/553175/

[11] – https://www.brightedge.com/blog/structured-data-ai-search-era

[12] – https://www.singlegrain.com/artificial-intelligence/ai-summary-optimization-ensuring-llms-generate-accurate-descriptions-of-your-pages/

[13] – https://vyndow.com/blog/mastering-h1-h2-tags-ai-content-structure/

[14] – https://www.ciphersdigital.com/marketing-guides/ai-seo-2026/

[15] – https://frase.io/blog/faq-schema-ai-search-geo-aeo

[16] – https://www.revvgrowth.com/ai-seo/best-practices-for-ai-visibility-seo

[17] – https://svitla.com/blog/seo-best-practices/

[18] – https://searchengineland.com/guide/voice-search

[19] – https://www.morevisibility.com/search-engine-optimization/insights/ai-in-voice-search-optimizing-for-conversational-queries/

[20] – https://moz.com/learn/seo/google-eat

[21] – https://www.lexiconlegalcontent.com/eeat-for-ai-search-law-firms/

[22] – https://llmseeding.io/blog-post-6-The New Authority: Why Being Cited by AI Matters More Than Backlinks.html

[23] – https://thedigitalbloom.com/learn/2025-ai-citation-llm-visibility-report/

[24] – https://www.localfalcon.com/features/google-ai-overview

[25] – https://visible.seranking.com/blog/best-ai-mode-tracking-tools-2026/
